Here is a guide by Jiri Herodek on how to use Amazon Web Services (AWS) and its machine learning module for sourcing, along with its pros and cons. Before we dive into the process, let us explain a few concepts you need to be familiar with before building this process into your sourcing strategy.

Amazon Web Services (AWS) is an on-demand cloud platform that provides services to both individuals and companies. AWS comprises various modules such as Application Integration, Analytics, AR & VR, Cost Management, Blockchain, Machine Learning, Robotics, Mobile, etc. You can find more information here. If you want to learn more, I suggest going through the free online training platform to get familiar with the technology and its basic concepts. In our sourcing process we use only the Machine Learning and S3 storage modules. You will find both in the administration of your AWS account.

The advantage of AWS is that you pay as you go, which is why I usually pay around USD 0.90 per month. The amount depends on the number of predictions (activities) you run. Predictions can be real-time (synchronous) or batch (asynchronous). I always use real-time predictions because you see the output immediately, they suit a smaller data sample, and they are also cheaper for this use.

The most important thing to remember is that machine learning models look for patterns in the data you upload to the system. You can use such a model to check how well candidates match in terms of skills, location, or photo.

The output is a JSON file that you import into your Google spreadsheet. It contains information about the candidates and their percentage fit rate.

What you will need for the whole process:

- AWS Account – opening one is free; you pay only for the predictions you make.

- Google Account – you will use Google Spreadsheets to run your searches and to import the results.

- XPath Helper – a Chrome extension that you can download here. It extracts, edits, and evaluates XPath queries on any webpage.

- Blockspring – an application you can use in your spreadsheet to import data from various sources; in effect, an API connector. There is also a Chrome extension that you can use to build your own apps.

The process includes the following parts and reflects the stages of the ML (machine learning) model in the setting of the AWS console:

- Data Preparation (Google Spreadsheet with data about the candidates).

- Training Data Source (uploading a CSV file with desired matching criteria to your S3 storage).

- Create a Machine Learning (ML) Model – you have to choose the right type of predictions, file type, etc.

- Review the ML Model – check the predictive performance bar, which should be around 90-100%.

- Set a Score Threshold – here you usually keep the default, because moving it changes which candidates are classified as a match. The threshold controls the trade-off between false positives and false negatives.

- Use the ML Model to generate Predictions.

- Clean up the data and import the JSON into the spreadsheet – check that your Google spreadsheet is allowed to import JSON from an external link and to run the import code. You can download open-source code for this here.
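To make the score threshold stage more concrete, here is a small sketch with made-up scores and labels (not real AWS output). In this sketch, every score at or above the cut-off counts as a match; moving the cut-off only changes how the same scores are classified, trading false positives against false negatives, without retraining anything.

```python
# Illustrative only: hypothetical match scores and true labels (1 = good fit).
scores = [0.92, 0.81, 0.55, 0.47, 0.30, 0.12]
labels = [1, 1, 0, 1, 0, 0]


def confusion_at(threshold, scores, labels):
    """Count outcomes when every score >= threshold is predicted as a match."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label:
            tp += 1  # correctly flagged as a fit
        elif predicted and not label:
            fp += 1  # flagged, but not actually a fit
        elif not predicted and label:
            fn += 1  # a real fit that was missed
        else:
            tn += 1  # correctly rejected
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


for t in (0.3, 0.5, 0.7):
    print(t, confusion_at(t, scores, labels))
```

With these numbers, lowering the cut-off to 0.3 catches every real fit but adds a second false positive, while raising it to 0.7 removes the false positive and still misses one fit.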


Now let’s dive into the process and what the key stages look like:

1) Boolean search

The most important phase is data preparation: you need all your data in one tab and the candidates you want to compare on skill, location, or photo in a second tab.

Start by creating a Boolean generator and use it as a solid foundation. Besides the Boolean generator, add a few other factors to the spreadsheet: the search engine you want to use and the website you want to pull data from (LinkedIn, AngelList, etc.). In other words, add a field for the website factor used by the Google or Bing crawlers, combine it with X-ray search strings for LinkedIn, AngelList, etc., and import the results into your Google spreadsheet with formulas from the IMPORT family (IMPORTXML, IMPORTHTML, etc.). You can find how to do it here.

Example:

=CONCATENATE(IF(B14="BING";"https://www.bing.com/search?q=";"https://www.google.com/search?num=50&safe=off&q=");IF(B15="LINKEDIN";"site%3Alinkedin.com%2Fin+";"site%3Angel.co+"))

For searches 1-16 we simply combine the appropriate website factor (for example, Google + LinkedIn) with the relevant skills, city, and country using the CONCATENATE function. Search 1 looks like this:

=CONCATENATE(B17;B6;" AND ";B7; " AND "; B8; " AND "; B10)
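The two formulas above can be mirrored outside the spreadsheet, which makes it easier to see what URL a given row produces. This is a minimal sketch: the engine and site prefixes are copied from the formula, but the function name and the example terms are hypothetical, and we assume cells B6, B7, B8, and B10 hold skills, city, and country values. Unlike the sheet, the sketch URL-encodes the query terms.

```python
from urllib.parse import quote_plus

# Prefixes copied from the spreadsheet formula: engine base URL + X-ray site filter.
ENGINE_URLS = {
    "BING": "https://www.bing.com/search?q=",
    "GOOGLE": "https://www.google.com/search?num=50&safe=off&q=",
}
SITE_FILTERS = {
    "LINKEDIN": "site%3Alinkedin.com%2Fin+",
    "ANGELLIST": "site%3Angel.co+",
}


def build_search_url(engine, site, terms):
    """Hypothetical helper: X-ray search URL with terms joined by AND."""
    # As in the IF() formula, anything other than BING falls back to Google...
    base = ENGINE_URLS["BING"] if engine == "BING" else ENGINE_URLS["GOOGLE"]
    # ...and anything other than LINKEDIN falls back to AngelList.
    prefix = SITE_FILTERS["LINKEDIN"] if site == "LINKEDIN" else SITE_FILTERS["ANGELLIST"]
    query = " AND ".join(terms)
    return base + prefix + quote_plus(query)


print(build_search_url("GOOGLE", "LINKEDIN", ["JavaScript", "React", "Prague", "Czech Republic"]))
```

Encoding the terms with `quote_plus` turns spaces into `+`, so multi-word values such as a country name stay inside a single, valid query string.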

2) Candidates

This tab only references the Boolean generator on the previous tab and our searches 1-16, using a simple condition like this:

=IFS(A3="Search 1";'Boolean search'!B20;A3="Search 2";'Boolean search'!B21;A3="Search 3";'Boolean search'!B22;A3="Search 4";'Boolean search'!B23;A3="Search 5";'Boolean search'!B24;A3="Search 6";'Boolean search'!B25;A3="Search 7";'Boolean search'!B26;A3="Search 8";'Boolean search'!B27;A3="Search 9";'Boolean search'!B28;A3="Search 10";'Boolean search'!B29;A3="Search 11";'Boolean search'!B30;A3="Search 12";'Boolean search'!B31;A3="Search 13";'Boolean search'!B32;A3="Search 14";'Boolean search'!B33;A3="Search 15";'Boolean search'!B34;A3="Search 16";'Boolean search'!B35)
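The IFS condition follows a fixed pattern: "Search N" points to row 19 + N on the 'Boolean search' tab, so Search 1 maps to B20 and Search 16 to B35. The sketch below (a hypothetical helper, not part of the original spreadsheet) computes that mapping directly; the same arithmetic could replace the long IFS chain with a single lookup.

```python
import re


def boolean_search_cell(label, first_row=20, count=16):
    """Map a label like 'Search 7' to its cell on the 'Boolean search' tab."""
    match = re.fullmatch(r"Search (\d+)", label.strip())
    if not match:
        raise ValueError(f"unexpected label: {label!r}")
    n = int(match.group(1))
    if not 1 <= n <= count:
        raise ValueError(f"search number out of range: {n}")
    # Search 1 sits in the first row, each later search one row further down.
    return f"'Boolean search'!B{first_row + n - 1}"


print(boolean_search_cell("Search 1"))
print(boolean_search_cell("Search 16"))
```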

Whenever you change the search, the crawler pulls candidates from the selected search engine and social network (or other source) and they appear in this tab.

3) Matching criteria

Finally, you need a third tab with the matching criteria, such as skills or location. You can assign each criterion a value such as 100% or 50%. Upload this data to your S3 storage as a CSV file and create your machine learning model.
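Here is a minimal sketch of turning the matching-criteria tab into the CSV file you upload to S3. The column names, criteria, and weights are hypothetical placeholders; use whatever layout your own tab has, and confirm the schema AWS proposes when you create the data source.

```python
import csv
import io

# Hypothetical criteria and weights; replace them with the rows of your third tab.
criteria = [
    {"criterion": "JavaScript", "type": "skill", "weight": 1.00},
    {"criterion": "React", "type": "skill", "weight": 0.50},
    {"criterion": "Prague", "type": "location", "weight": 1.00},
]


def criteria_to_csv(rows):
    """Serialize criteria rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["criterion", "type", "weight"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = criteria_to_csv(criteria)
# Save this text as a .csv file and upload it to your S3 bucket.
print(csv_text)
```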

4) Final step

Create a new tab in your spreadsheet to hold the imported results, and check that your settings allow importing JSON files from AWS. This GitHub repository shows how to add JSON import functionality.

This method is useful for testing your sourcing hypotheses about the available talent pool and market size. The system sources candidates for you automatically, and the whole process is scalable and easy to use. Its drawbacks are that matching candidates on location is limited and that it works best for IT roles.
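Before the results reach the spreadsheet, the JSON has to be flattened into rows. The sketch below assumes each record carries the candidate's name and URL plus a `Prediction` object with `predictedLabel` and `predictedScores`; that shape, the sample candidates, and the helper name are assumptions, so compare them with the JSON your own model actually returns.

```python
import json

# Assumed shape of one prediction record per candidate; verify against your output.
raw = """[
  {"name": "Candidate A", "url": "https://www.linkedin.com/in/a",
   "Prediction": {"predictedLabel": "1", "predictedScores": {"1": 0.93}}},
  {"name": "Candidate B", "url": "https://www.linkedin.com/in/b",
   "Prediction": {"predictedLabel": "0", "predictedScores": {"0": 0.21}}}
]"""


def to_rows(records):
    """Flatten prediction records into spreadsheet rows: name, URL, fit %."""
    rows = [["Name", "Profile URL", "Fit %"]]
    for rec in records:
        scores = rec["Prediction"]["predictedScores"]
        score = next(iter(scores.values()))  # one score per record in this sample
        rows.append([rec["name"], rec["url"], round(score * 100, 1)])
    return rows


for row in to_rows(json.loads(raw)):
    # Tab-separated lines paste cleanly into separate spreadsheet columns.
    print("\t".join(str(cell) for cell in row))
```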

Sourcing with Amazon Web Services

The whole idea is very simple. You need extracted data about your candidates (names, LinkedIn profiles, titles, etc.) in your Google spreadsheet. For this data-mining step, you can use automated scripts that pull results from Google or Bing in XML format and import them into your Google spreadsheet. The original spreadsheet can look like this one.
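In the spreadsheet, this extraction is typically done with IMPORTXML and an XPath query (the kind of query XPath Helper helps you write). The sketch below shows the same idea in Python on a hypothetical RSS-style response; the XML structure and the sample profiles are assumptions, since the real layout depends on the search engine and output format you use.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS-style search response; real engines may structure it differently.
sample_rss = """<rss version="2.0"><channel>
<item><title>Jane Doe - Frontend Developer - Acme | LinkedIn</title>
<link>https://www.linkedin.com/in/janedoe</link></item>
<item><title>John Roe - React Engineer | LinkedIn</title>
<link>https://www.linkedin.com/in/johnroe</link></item>
</channel></rss>"""


def parse_results(xml_text):
    """Extract (title, link) pairs from every <item> element."""
    root = ET.fromstring(xml_text)
    results = []
    # ElementTree supports a subset of XPath, enough for this path.
    for item in root.findall("./channel/item"):
        title = item.findtext("title", default="").strip()
        link = item.findtext("link", default="").strip()
        results.append((title, link))
    return results


for title, link in parse_results(sample_rss):
    # In this sample, titles start with "Name - Headline", so split on the first dash.
    name = title.split(" - ", 1)[0]
    print(name, link)
```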

Afterward, create some automated scripts in your Google spreadsheet that update the data whenever you change the data source (Google or Bing) or the social network (LinkedIn, AngelList, GitHub, etc.). The whole sourcing process is quick and smooth. You get an instant overview of the talent pool in various countries along with the matching score, and the script brings you data about candidates on LinkedIn (name, LinkedIn URL, etc.) from, for example, both Google and Bing. This lets you see which profiles are indexed by Google and which by Bing, giving you a more comprehensive overview of the market.
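Comparing what Google and Bing return comes down to set operations on the profile URLs. This is a minimal sketch with hypothetical URLs; the light normalization (lowercasing, trimming a trailing slash) is an assumption meant to keep trivially different URLs from being counted twice.

```python
def normalize(url):
    """Lowercase and drop a trailing slash so near-identical URLs compare equal."""
    return url.strip().lower().rstrip("/")


def compare_index_coverage(google_urls, bing_urls):
    """Split profile URLs by which search engine returned them."""
    google = {normalize(u) for u in google_urls}
    bing = {normalize(u) for u in bing_urls}
    return {
        "both": sorted(google & bing),
        "google_only": sorted(google - bing),
        "bing_only": sorted(bing - google),
        "total_unique": len(google | bing),
    }


google_results = ["https://www.linkedin.com/in/a", "https://www.linkedin.com/in/b/"]
bing_results = ["https://www.linkedin.com/in/b", "https://www.linkedin.com/in/c"]
print(compare_index_coverage(google_results, bing_results))
```

The `total_unique` count is the combined talent pool, which is the figure that matters when you use both engines to estimate market size.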

Create a machine learning model in your AWS account that matches your candidates against the given criteria. AWS lets you match candidates on location and skills.

For instance, suppose we want to know the percentage fit between a particular candidate and the role. Say three skills (JavaScript, React, and Angular) are crucial for the role: JavaScript is a must-have (100%), React is important (50%), and Angular is nice to have (25%). We assign each skill a percentage based on its importance. For location, download a list of cities (or countries).
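To sanity-check the model's output, it can help to have a transparent baseline. The sketch below uses the weights from the example above and a simple rule we assume for illustration: the fit is the share of the total weight covered by the candidate's skills. This is not how AWS computes its score; it is only a hand-calculable reference point.

```python
# Weights from the example: JavaScript is a must-have, React is important,
# Angular is nice to have.
SKILL_WEIGHTS = {"javascript": 1.00, "react": 0.50, "angular": 0.25}


def fit_percentage(candidate_skills, weights=SKILL_WEIGHTS):
    """Share of the total skill weight the candidate covers, as a percentage."""
    have = {s.lower() for s in candidate_skills}
    matched = sum(w for skill, w in weights.items() if skill in have)
    return round(100 * matched / sum(weights.values()), 1)


print(fit_percentage(["JavaScript", "React", "Angular"]))  # 100.0
print(fit_percentage(["JavaScript", "Angular"]))  # 71.4
print(fit_percentage(["React"]))  # 28.6
```

Under this rule a candidate with only React scores far below one with JavaScript and Angular, which matches the priorities in the example; if the model's ranking differs sharply, it is worth checking the training data.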


In this article we have mentioned GitHub, the largest web-based hosting service for IT projects. Here you can learn more about the platform and how it can be used by tech recruiters to source for candidates.

80% of Tech candidates are passive.

Level up your outbound sourcing strategy.Ready to start sourcing IT candidates?

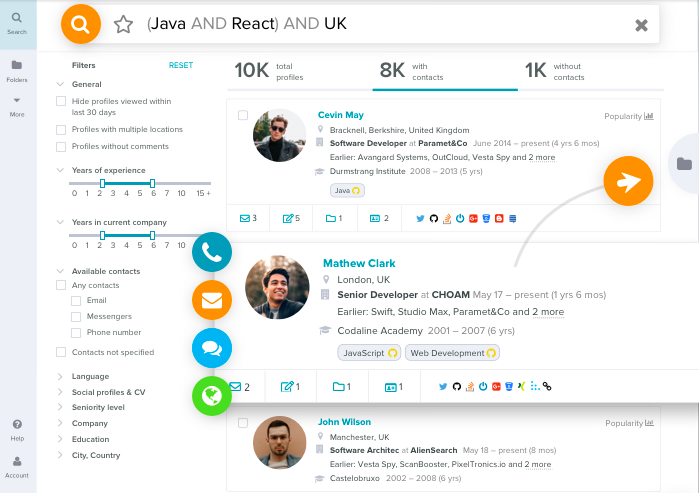

AmazingHiring is an AI-based solution for tech recruiters to source passive IT candidates across the web. It aggregates IT profiles from 50+ networks like GitHub, Stackoverflow, Facebook, Kaggle, etc. using Boolean operators, and provide recruiters with candidates’ professional background, contacts, skills.

AmazingHiring empowers recruiters to double their pipeline and improve their sourcing metrics.

#1 Playbook: The Ultimate Guide to Sourcing on Social Media

Subscribe to AmazingHiring’s sourcing newsletter and get the #1 Playbook: The Ultimate Guide to Sourcing on Social Media

Social media sites are valuable sources of information for any recruiter. This is especially handy when you have to navigate in a highly competitive niche like software development. There is a massive shortage of talent in this space so that to find and hire the right candidate, you have to be very fast, proactive, and […]

Let’s face it. Job boards suck. In 2020, there is a zero chance that top engineers will pay attention to your job listings unless you are a tech giant like Google or Facebook. Startups and even established, yet not-so-famous companies can implement the smart recruiting approach to compete for top tech talent. But how to […]

Sourcing is the key in the hiring process, as it helps the recruiter to find relevant information about the right candidates with experience and skills a company needs. With sourcing, it’s easier to identify if the candidate is actively looking for a job or not. At first, sourcing may seem like a very overwhelming and […]